🤖 🏛️ Have you ever wondered about the connection between AI and Ancient Greek Philosophy?

🧔 📜 Ancient Greek philosophers such as Aristotle, Plato, Socrates, Democritus, Epicurus, and Heraclitus explored the nature of intelligence and consciousness more than two thousand years ago, and their ideas are still relevant today in the age of AI.

🧠 📚 Aristotle described a hierarchy of capacities among living things, ranging from plants through animals to human beings, with reason belonging to humans alone. In the context of AI, this idea raises questions about the nature of machine intelligence and where it falls on that spectrum. Meanwhile, Plato believed that knowledge is innate and can be recovered through reason and contemplation. This view has implications for AI, as it suggests that a machine could potentially have access to all knowledge without necessarily understanding it in the same way that a human would.

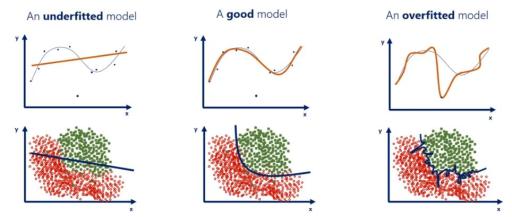

💭 💡 Plato also believed in the concept of Platonic forms, which are abstract concepts or objects that exist independently of physical experience. In the context of machine learning, models can be thought of as trying to learn these Platonic forms from experience, such as recognizing patterns and relationships in data.

⚛️ 💫 Democritus is known for his work on atoms and his belief that everything in the world can be reduced to basic building blocks. In the context of AI, this idea of reducing complex systems to their fundamental components has inspired the development of bottom-up approaches, such as deep learning, reinforcement learning and evolutionary algorithms.

🔥 🌊 Heraclitus is credited with the idea that “the only constant is change”. This philosophy speaks directly to a core challenge in machine learning: building models that can handle non-stationary data, where the patterns a model learned yesterday may no longer hold tomorrow. It highlights the importance of designing algorithms that are flexible and can adapt to changing environments, rather than relying on fixed models that may become outdated over time.
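To make the point about non-stationary data concrete, here is a minimal sketch in Python. It compares an estimate that is fitted once and then frozen with an exponentially weighted estimate that keeps updating. The stream, the function names, and the smoothing factor `alpha` are all assumptions made up for illustration, not a recommendation for real systems.

```python
def fixed_mean(values):
    """Estimate fitted once on a snapshot of the data and never updated."""
    return sum(values) / len(values)


def adaptive_mean(values, alpha=0.3):
    """Exponentially weighted estimate that favors recent observations."""
    estimate = values[0]
    for value in values[1:]:
        # Move the estimate a fraction `alpha` of the way toward each new value.
        estimate = (1 - alpha) * estimate + alpha * value
    return estimate


# Hypothetical stream whose underlying level shifts from 0 to 10 halfway through.
stream = [0.0] * 50 + [10.0] * 50

static_estimate = fixed_mean(stream[:50])   # fitted before the shift, then frozen
adaptive_estimate = adaptive_mean(stream)   # keeps updating through the shift

print(f"static estimate:   {static_estimate:.2f}")
print(f"adaptive estimate: {adaptive_estimate:.2f}")
```

The frozen estimate stays at the old level, while the adaptive one ends up close to the new level. A larger `alpha` adapts faster but is also more sensitive to noise.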

🔁 🏆 What about reinforcement learning? Aristotle believed in the power of habituation to shape behavior. According to him, repeatedly practicing virtuous actions leads to the formation of good habits, until virtuous behavior becomes second nature. This idea is similar to the concept of reinforcement learning in AI, where an agent learns to make better decisions by repeatedly acting and receiving rewards or punishments for its actions. The similarities between Aristotle’s ideas and reinforcement learning show that the fundamental principles of reinforcement and habituation, later formalized in psychological theories of learning, have been recognized and explored for thousands of years and are still relevant today in discussions of both human behavior and AI.
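As a rough illustration of learning from rewards and punishments, the sketch below uses an epsilon-greedy agent choosing between two actions. It is a deliberately tiny toy, not a full reinforcement learning algorithm. The action names, the reward values, and the exploration rate are hypothetical choices made only for this example.

```python
import random

# Hypothetical actions and rewards: practicing is rewarded, skipping is punished.
REWARDS = {"skip": -1.0, "practice": 1.0}


def train_agent(steps=500, epsilon=0.1, seed=0):
    """Learn action values by repeated trial, reward, and punishment."""
    rng = random.Random(seed)
    values = {action: 0.0 for action in REWARDS}  # estimated value per action
    counts = {action: 0 for action in REWARDS}    # how often each was tried

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(list(REWARDS))    # explore: try something at random
        else:
            action = max(values, key=values.get)  # exploit: follow the current habit
        reward = REWARDS[action]
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]

    return values, counts


values, counts = train_agent()
print("learned values:", values)
print("times chosen:  ", counts)
```

After enough repetitions the agent picks the rewarded action almost every time, which is a loose computational analogue of a habit forming through practice.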

🕊️ 💛 The Greek concept of the soul was also tied to intelligence and consciousness. Some philosophers believed that the soul is what gives a person their unique identity and allows them to think, feel, and make decisions. In the context of AI, this raises questions about whether a machine can truly have a soul and be conscious in the same way that a human is.

💀 💔 Another Greek philosopher who explored the nature of intelligence and consciousness is Epicurus. He believed that the mind and soul were made up of atoms, just like the rest of the physical world. He also believed that the mind and soul were mortal and would cease to exist after death. In the context of AI, Epicurus’ ideas raise questions about the nature of machine consciousness and whether it is possible for machines to have a kind of “mind” or “soul” that is distinct from their physical components. They also raise questions about the possibility of creating machines that are mortal or that can “die” in some sense. Epicurus’ ideas about the nature of the mind and soul are still debated today, and their relevance to the field of AI is an ongoing topic of discussion.

👁️ 🗣️ With the advancements in computer vision, natural language understanding, and deep learning in general, it’s more important than ever to consider these philosophical questions. For example, deep neural networks can analyze vast amounts of data and generate human-like responses, but do they truly understand the meaning behind the words they generate? This is where the debate between connectionist AI and symbolic AI comes in.

🧠 🕸️ Connectionist approaches to AI are based on the idea that intelligence arises from the interactions of simple, interconnected processing units. Symbolic approaches, on the other hand, are based on the idea that intelligence arises from the manipulation of symbols and rules. In the context of ancient Greek philosophy, connectionist AI could be seen as aligning more with the idea of knowledge being discovered through experience and observation, while symbolic AI aligns more with the idea of knowledge being innate and discovered through reason and contemplation.

🗣️ 💬 Humans use natural language, which includes speech and written text, to communicate with the world. However, natural language is unstructured and can be unpredictable because it emerged organically rather than being designed. If it had been designed, natural language processing might well have been solved a long time ago. Nowadays, deep neural language models learn language from text by predicting the next token in a sequence. Natural languages, however, are continually evolving, which makes them a moving target.
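The idea of predicting the next token can be sketched with a toy bigram model that simply counts which word follows which. This is far simpler than a deep neural language model and is shown only to illustrate the prediction task. The corpus and the function names are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every token, which tokens follow it in the text."""
    tokens = text.lower().split()
    followers = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        followers[current][following] += 1
    return followers


def predict_next(model, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    candidates = model.get(token)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus.
corpus = "the only constant is change and the only way is forward"
model = train_bigram_model(corpus)

print(predict_next(model, "the"))      # most frequent word after "the"
print(predict_next(model, "zeus"))     # unseen token, so no prediction
```

A model like this only knows the text it was trained on, so any word or usage that appears later is invisible to it until it is retrained, which is the moving-target problem in miniature.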

🧠 ❤️ Plato was skeptical of language, and of writing in particular, because he believed it is unable to fully capture the breadth and depth of a person’s thoughts. He held that genuine, live human communication is necessary for the progression of the human mind and the creation of new ideas; today we might add body language, eye contact, and physical touch to that picture. In today’s digital age, we are observing a decline in face-to-face human interaction, which underscores the importance of acknowledging the limitations of language. Despite the challenges inherent in natural language, it is crucial that we persist in developing tools to better comprehend and employ it. While embracing technological advancements, we should also strive to maintain meaningful human connections to preserve the richness of the human experience.

🏛️ 🤖 Have you ever heard of Talos? He is often described as the first robot in Greek mythology! According to legend, Talos was a bronze automaton created by the god Hephaestus to protect the island of Crete. He was said to be nearly invulnerable and to possess immense strength, making him a formidable guardian. This idea of a powerful, almost indestructible automaton has been a recurring theme in science fiction and continues to inspire new developments in the field of AI and robotics.

⚖️ 🌍 It’s fascinating to consider how the myth of Talos reflects the enduring human fascination with creating machines that can surpass our own capabilities. The notion of a powerful automaton created to guard a specific territory mirrors modern ideas around autonomous systems that are designed to perform specific tasks and operate independently. However, while Talos was depicted as a purely mechanical creation, contemporary AI and robotics are built upon complex algorithms and data sets that allow machines to learn, adapt, and improve over time. By embracing the potential of these technologies, we have the opportunity to develop new solutions to some of the most pressing challenges facing our world today, from climate change to healthcare. As we continue to push the boundaries of what machines are capable of, it’s important to consider the ethical implications of creating truly intelligent and conscious entities. Some believe that the development of advanced AI could lead to a future where machines are not only equal to humans, but even superior to us. Others argue that such a scenario is unlikely, as true consciousness may only be achievable through biological processes that cannot be replicated in machines. As we navigate these complex questions, it’s crucial that we prioritize ethical considerations and work to ensure that the development of AI and robotics benefits humanity as a whole.

🙏🏽🤝 When it comes to ethics and morality, Aristotle is one of the most influential figures in history. His emphasis on the importance of human virtues and the pursuit of a “good life” has been a guiding principle for philosophers, theologians, and thinkers across generations. Aristotle’s teachings offer valuable insights into how we should approach the ethical implications of creating intelligent agents. One crucial aspect of this is ensuring that, if these agents ever turn out to be sentient, we treat them with the same respect and dignity that we would afford to any sentient being. This means avoiding the temptation to view AI as mere tools or objects to be used for our own purposes, and recognizing their autonomy as intelligent entities. Another important consideration is the issue of bias and discrimination, which can be inadvertently built into AI systems through the data and algorithms used to create them. Aristotle’s emphasis on justice and fairness can serve as a guide for addressing these issues, so that AI is developed in a way that is equitable and beneficial for all of humanity.

🧠 🔬 To end this article, it would be remiss not to mention Socrates, widely considered to be the wisest of all Greek philosophers, not because of his vast knowledge, but because he recognized the limits of his own knowledge. “I know one thing, and that is that I know nothing,” Socrates is famously said to have stated. This concept is particularly relevant to the field of AI, as we continue to push the boundaries of what machines can do and be. As we develop increasingly complex and intelligent AI, the question arises whether it will be possible to prove the existence of self-awareness in these machines.

💬 These are just a few examples of how the ideas of ancient Greek philosophers and mythology can inform our understanding of AI. I hope these thoughts spark further conversation and contemplation on this fascinating topic.

🤔 What do you think about the potential for machines to truly be intelligent and conscious?